In the fast-paced world of financial markets, speed is not just an advantage; it’s often the determining factor between profit and loss. Trading Latency, the seemingly simple concept of the delay between deciding to place a trade and that trade actually being executed on an exchange, has become one of the most critical battlegrounds for market participants. For decades, there has been a relentless drive to reduce this delay, leading to a phenomenon we can accurately describe as Exponential Latency Compression. This isn’t just about getting faster; it’s about a fundamental transformation in how trading is conducted, who succeeds, and the very structure of the markets themselves.

Table of Contents

- Trading Latency: The Race Against Time

- The Evolution of Trading Speed: From Seconds to Milliseconds

- Entering the Microsecond Era: Co-location and the Rise of HFT

- Pushing the Boundaries: Ultra-Low Latency (ULL) and the Nanosecond Frontier

- Technological Drivers of Latency Compression

- Impact on Market Structure and Algorithmic Trading

- Challenges, Criticisms, and the Future of Latency Compression

This article will take you through the incredible journey of trading speed, from the age of seconds to the frontier of nanoseconds, exploring the technological leaps, the strategies they enabled, and the profound impact on the financial landscape.

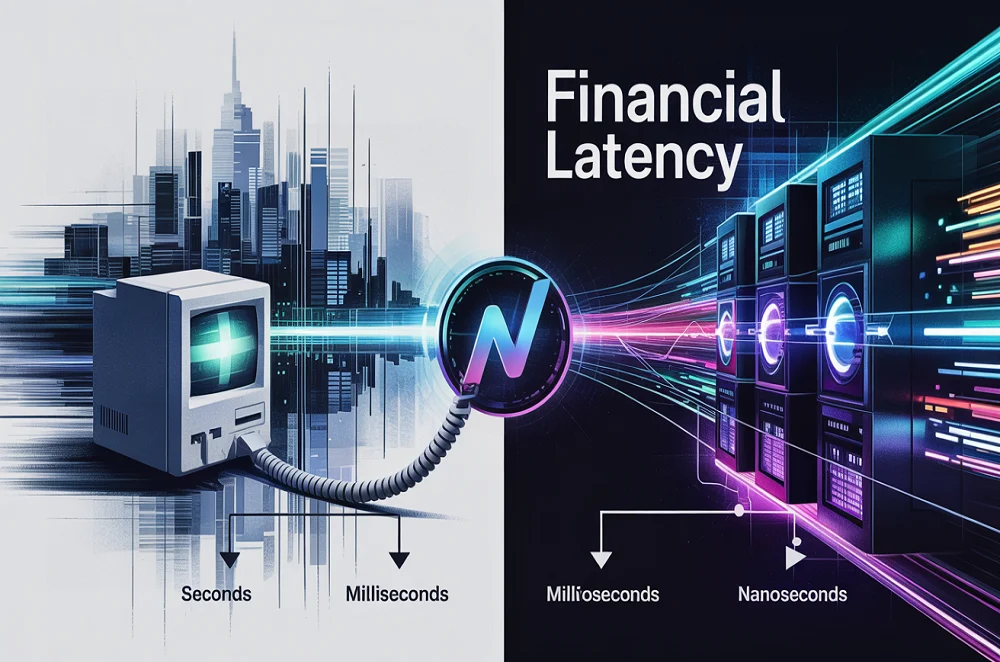

The Evolution of Trading Speed: From Seconds to Milliseconds

Imagine a time when placing a stock order involved calling a broker or, in the early days of electronic trading, relying on connections that were akin to the dial-up internet of the 1990s. In this era, Trading Latency was measured in a timeframe we would now consider astronomical: seconds.

- Early Electronic Trading: While a step up from purely manual systems, these early platforms were limited by the available technology.

- Dial-Up Connections: The internet infrastructure itself was slow and inconsistent. Data packets took significant time to travel from a trader’s computer to the exchange servers.

- Human Element: Despite electronic interfaces, human interaction (like a broker confirming an order) often added to the overall delay.

Impact: This level of latency meant that trading strategies were inherently slower. Large, long-term investments or strategies that didn’t rely on split-second timing were prevalent. The idea of rapidly trading in and out of positions based on tiny price movements was simply not feasible for most.

The early 2000s brought a significant technological revolution to the masses: Broadband Internet.

- Increased Bandwidth: Broadband offered dramatically higher data transfer rates compared to dial-up.

- Reduced Jitter and Delay: Connections became more stable and predictable, significantly cutting down the time it took for an order to travel.

Impact: This ushered in the age of millisecond latency. While still slow by today’s standards (a millisecond is one-thousandth of a second), it was a game-changer.

This reduction to milliseconds had a profound effect:

- Rise of Retail Electronic Trading: Trading platforms became more accessible and responsive for individual investors.

- Enabled Early Algorithmic Trading: Complex automated trading strategies that reacted to market data started to become viable.

These early algorithms could analyze data and send orders faster than a human, but they were still limited by the millisecond barrier. Strategies might focus on executing larger orders efficiently or taking advantage of price differences that persisted for several milliseconds.
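One common way to execute a large order efficiently is to slice it into smaller child orders spread evenly over time, known as time-weighted average price (TWAP) slicing. The text does not name a specific technique, so TWAP is our illustrative choice, and the function `twap_schedule` and its parameters are hypothetical. This is a minimal sketch, not a production execution algorithm.

```python
from datetime import datetime, timedelta


def twap_schedule(total_qty: int, start: datetime, end: datetime, slices: int):
    """Split a parent order into equally spaced child orders (a simple TWAP sketch).

    Any remainder is spread one share at a time over the earliest slices,
    so the child quantities always sum exactly to total_qty.
    """
    if slices <= 0 or total_qty < 0:
        raise ValueError("slices must be positive and total_qty non-negative")
    if end <= start:
        raise ValueError("end must be after start")

    base, remainder = divmod(total_qty, slices)
    interval = (end - start) / slices  # timedelta divided by int -> timedelta

    schedule = []
    for i in range(slices):
        qty = base + (1 if i < remainder else 0)
        schedule.append((start + i * interval, qty))
    return schedule


if __name__ == "__main__":
    # Hypothetical parent order: 10,000 shares over 30 minutes in 6 slices.
    start = datetime(2024, 1, 2, 9, 30)
    end = start + timedelta(minutes=30)
    for send_time, qty in twap_schedule(10_000, start, end, slices=6):
        print(send_time.time(), qty)
```

At millisecond latency, a schedule like this is easy to follow; the harder problem, then and now, is deciding how to adapt the slices to live market conditions.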

This period set the stage for the next, even more disruptive leap in speed.


Entering the Microsecond Era: Co-location and the Rise of HFT

While milliseconds enabled automation, the true explosion in speed and its impact came with the drive to reduce latency further – into the realm of microseconds (millionths of a second). This massive leap was driven by both technological innovation and a fundamental shift in physical infrastructure.

The most significant development was Co-location.

What is Co-location? Instead of sending orders from an office across town, across the country, or across the world to an exchange’s data center, trading firms began placing their servers and networking equipment physically inside or immediately adjacent to the exchange’s own data center.

Why is Proximity Key? Data travels at or near the speed of light, but through fiber optic cables it moves at only about two-thirds of that speed, roughly 5 microseconds per kilometer. Even a few miles of extra distance can therefore add crucial microseconds of delay. By being physically closer to the exchange’s matching engine (where buy and sell orders are matched), a firm’s orders and market data can travel back and forth much faster than those of a firm located further away.

- Reduced Network Hops: Co-location minimizes the number of routers and network infrastructure the data has to pass through, each of which introduces a small amount of delay.

- Dedicated Infrastructure: Firms often invest in their own high-speed fiber optic cables and specialized network equipment within the co-location facility, bypassing slower public internet infrastructure.
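To see why both distance and hop count matter, the rough model below adds one-way propagation delay through fiber to a fixed delay per network hop. It assumes a fiber refractive index of about 1.47 (light at roughly two-thirds of its vacuum speed). The 5-microsecond per-hop figure and the example distances are placeholder assumptions, not measurements of any real venue or network.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum
FIBER_REFRACTIVE_INDEX = 1.47         # assumed typical value for silica fiber


def fiber_delay_us(distance_m: float) -> float:
    """One-way propagation delay through optical fiber, in microseconds."""
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / FIBER_REFRACTIVE_INDEX
    return distance_m / speed_in_fiber * 1e6


def one_way_latency_us(distance_m: float, hops: int, per_hop_us: float) -> float:
    """Propagation delay plus a fixed (assumed) delay for each router/switch hop."""
    return fiber_delay_us(distance_m) + hops * per_hop_us


if __name__ == "__main__":
    # Hypothetical scenarios: a remote office vs. a co-located rack.
    remote = one_way_latency_us(distance_m=50_000, hops=8, per_hop_us=5.0)
    colo = one_way_latency_us(distance_m=100, hops=1, per_hop_us=5.0)
    print(f"remote office: {remote:.1f} us one-way")
    print(f"co-located:    {colo:.2f} us one-way")
```

Under these assumptions each kilometer of fiber costs about 4.9 microseconds one-way, and every hop removed saves its full per-hop delay, which is why co-location attacks both terms at once.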

This move to Co-location effectively squeezed latency from milliseconds down into the microsecond range for firms willing to invest heavily in proximity and technology. This speed advantage created fertile ground for a new, highly specialized form of trading: High-Frequency Trading (HFT).

High-Frequency Trading (HFT) is characterized by:

- Extremely Short Holding Periods: HFT firms hold positions for very brief durations – often fractions of a second.

- High Turnover: They execute a massive number of trades daily.

- Sophisticated Algorithms: Trading decisions are made entirely by complex computer programs reacting to real-time market data.

- Small Profits Per Trade: HFT strategies typically aim to make tiny profits on each individual trade, relying on the high volume of trades to generate significant overall returns.

- Sensitivity to Latency: The profitability of many HFT strategies is directly tied to being able to react to market changes or place orders microseconds faster than competitors.

Types of HFT strategies enabled by microsecond latency include:

- Statistical Arbitrage: Identifying tiny, temporary price discrepancies between highly correlated assets and trading them simultaneously to profit from the convergence. Being faster allows them to exploit these fleeting opportunities before others.

- Market Making: Providing liquidity by simultaneously placing buy and sell orders for a security. The speed allows them to update their quotes rapidly in response to market movements, managing risk and profiting from the bid-ask spread.

- Event Arbitrage: Trading rapidly around the release of economic data or news, trying to profit from the immediate price reaction. Speed in processing and acting on the data is paramount.
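The statistical arbitrage idea above can be sketched as a z-score on the spread between two correlated price series. When the spread strays far from its recent mean, the strategy sells the rich leg and buys the cheap one, betting on convergence. The fixed hedge ratio, window length, and entry threshold below are illustrative assumptions. Real implementations estimate these carefully and must act before the discrepancy closes.

```python
from statistics import mean, stdev


def spread_zscore(prices_a, prices_b, hedge_ratio=1.0, window=20):
    """Z-score of the latest spread (a - hedge_ratio * b) versus a trailing window."""
    if len(prices_a) != len(prices_b):
        raise ValueError("price series must have equal length")
    if window < 2 or len(prices_a) < window:
        raise ValueError("window must be >= 2 and covered by the data")
    spreads = [a - hedge_ratio * b for a, b in zip(prices_a, prices_b)]
    recent = spreads[-window:]
    sd = stdev(recent)
    if sd == 0:
        return 0.0
    return (recent[-1] - mean(recent)) / sd


def signal(z, entry=2.0):
    """Map a z-score to a pair-trade action (the threshold is an assumed parameter)."""
    if z > entry:
        return "sell A / buy B"  # spread unusually wide: A rich relative to B
    if z < -entry:
        return "buy A / sell B"  # spread unusually narrow: A cheap relative to B
    return "no trade"


if __name__ == "__main__":
    # Hypothetical prices: A drifts slightly, then jumps away from B on the last tick.
    a = [100.0 + 0.01 * (i % 3) for i in range(19)] + [100.5]
    b = [100.0] * 20
    z = spread_zscore(a, b, window=20)
    print(round(z, 2), signal(z))
```

The code only decides what to do. Whether the trade is profitable depends on reaching the market before competitors who compute the same signal.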

The advent of Co-location and High-Frequency Trading (HFT) in the mid-2000s marked a pivotal moment, making microsecond latency the new standard for competitive trading.

Pushing the Boundaries: Ultra-Low Latency (ULL) and the Nanosecond Frontier

While achieving microsecond speeds seemed incredibly fast, the competitive nature of HFT meant the race didn’t stop there. Firms continuously sought ways to shave off even the smallest fractions of a microsecond. This pursuit led to the era of Ultra-Low Latency (ULL) strategies.

Ultra-Low Latency (ULL) refers to trading operations pushing toward the bottom of the microsecond range, aiming for single-digit microseconds and, increasingly, nanoseconds (billionths of a second).

- Beyond Standard Servers: Achieving ULL requires moving beyond conventional server hardware.

- Field-Programmable Gate Arrays (FPGAs): These are specialized chips that can be programmed to perform specific tasks (like processing market data and generating orders) much faster and more efficiently than general-purpose CPUs. They execute operations in hardware logic rather than software instructions, drastically reducing processing time.

- Optimized Networking: Further optimization of the network path within the data center and between exchanges is crucial. This includes using faster switches and potentially even non-electronic data transmission methods for short distances.

- Cutting-Edge Software: While hardware is key, the software running on it must be incredibly efficient, minimizing processing cycles and overhead.
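Much of what FPGAs and tuned software accelerate is decoding market data. As a software-side illustration only, the sketch below decodes a fixed-length binary quote message with a precompiled `struct.Struct`, which avoids re-parsing the format string and any text handling on every message. The message layout and the `Quote`/`decode_quote` names are hypothetical and do not correspond to any real exchange protocol. Latency-critical feed handlers are usually written in lower-level languages or implemented directly in hardware.

```python
import struct
from typing import NamedTuple

# Hypothetical layout (little-endian, no padding), 40 bytes in total:
#   uint64 timestamp_ns | 8-byte ASCII symbol | int64 bid (1e-4 units)
#   | int64 ask (1e-4 units) | uint32 bid size | uint32 ask size
QUOTE = struct.Struct("<Q8sqqII")


class Quote(NamedTuple):
    timestamp_ns: int
    symbol: str
    bid: float
    ask: float
    bid_size: int
    ask_size: int


def decode_quote(buf: bytes, offset: int = 0) -> Quote:
    """Decode one fixed-size quote message starting at `offset` in `buf`."""
    ts, sym, bid_raw, ask_raw, bid_sz, ask_sz = QUOTE.unpack_from(buf, offset)
    return Quote(
        timestamp_ns=ts,
        symbol=sym.rstrip(b" \x00").decode("ascii"),  # strip space/NUL padding
        bid=bid_raw / 10_000,  # prices arrive as fixed-point integers
        ask=ask_raw / 10_000,
        bid_size=bid_sz,
        ask_size=ask_sz,
    )


if __name__ == "__main__":
    msg = QUOTE.pack(1_700_000_000_000_000_000, b"ABC     ", 1_000_500, 1_001_000, 300, 200)
    print(QUOTE.size, decode_quote(msg))
```

Fixed-size fields at known offsets are what make this kind of decoding cheap, and the same property is what allows it to be laid out directly in FPGA logic.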

The move towards nanoseconds represents the current frontier of this speed race. A nanosecond is one-thousandth of a microsecond. The differences in speed at this level are often measured in the time it takes light to travel just a few feet.

- Physical Limits: At this scale, the fundamental speed of light becomes a significant constraint. Optimizing the physical layout of equipment within a data center to minimize cable length becomes critical.

- Technological Edge: Achieving nanosecond speed requires significant investment in the most advanced hardware and expert engineering talent.

- Competitive Advantage: Firms operating at nanosecond speeds have a measurable advantage over those only at low microseconds for certain strategies.

This push for Ultra-Low Latency (ULL) is not just about winning a race; it’s about being able to react to market events, process data, and place orders before almost anyone else. It allows strategies to profit from opportunities that exist for only the briefest of moments.

Technological Drivers of Latency Compression

The journey from seconds to nanoseconds wasn’t accidental. It was driven by continuous innovation across multiple technological fronts:

Network Infrastructure:

- Fiber Optics: Replacing copper cables with fiber dramatically increased data transmission rates (bandwidth) and reduced signal loss over long distances.

- Faster Switches and Routers: The equipment that directs data traffic has become exponentially faster and more efficient.

- Microwave and Laser Communication: For specific, high-priority links (often between data centers or exchanges), firms use microwave or even laser-based communication networks. These can offer lower latency than fiber because signals travel through air at close to the speed of light in a vacuum and can follow straighter paths, although weather can degrade their reliability.
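The microwave advantage comes down to two factors: the medium’s refractive index and the path length. The sketch below compares the two under stated assumptions. Air has a refractive index of about 1.0003 and silica fiber about 1.47. The route lengths (a nearly straight microwave path and a fiber path 20% longer than the straight-line distance) are hypothetical, not the parameters of any real network.

```python
SPEED_OF_LIGHT_KM_PER_MS = 299.792458  # km per millisecond in a vacuum

# Assumed refractive indices: air ~1.0003, silica fiber ~1.47.
MEDIA = {"microwave (air)": 1.0003, "fiber": 1.47}


def one_way_ms(path_km: float, refractive_index: float) -> float:
    """One-way propagation delay in milliseconds over a path of the given length."""
    return path_km * refractive_index / SPEED_OF_LIGHT_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical routes between two data centers 1,000 km apart in a straight line.
    routes = {"microwave (air)": 1_020.0, "fiber": 1_200.0}
    for medium, path_km in routes.items():
        print(f"{medium:16s} {one_way_ms(path_km, MEDIA[medium]):.3f} ms one-way")
```

Even with identical routes, the refractive index alone gives air links roughly a one-third speed advantage over fiber, which is why firms accept the weather risk on their most latency-sensitive links.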

Computing Hardware:

- Faster CPUs: General-purpose processors became much quicker over time, though their gains eventually became insufficient for the most demanding ULL needs.

- Specialized Processors (FPGAs, ASICs): As mentioned, FPGAs and Application-Specific Integrated Circuits (ASICs) offer hardware-level processing tailored for specific tasks, providing significant speed advantages over CPUs for latency-critical operations.

- Faster Memory and Storage: Quicker access to data stored in memory (RAM) and on storage devices (SSDs) reduces delays in data processing.

Software Optimization:

- Efficient Code: Writing trading algorithms and system software in highly optimized, low-level languages that minimize processing cycles.

- Kernel Bypass: Techniques that allow trading applications to interact directly with network hardware, bypassing the operating system’s networking stack to reduce overhead and delay.

Physical Infrastructure:

- Physical Proximity (Co-location): As detailed earlier, the strategic placement of trading infrastructure directly addresses the latency introduced by physical distance and network hops.

These interconnected advancements have collectively fueled Exponential Latency Compression, making previously unimaginable speeds a reality.

Impact on Market Structure and Algorithmic Trading

The dramatic reduction in Trading Latency has fundamentally reshaped financial markets and trading strategies.

- Dominance of Algorithmic Trading: The speed available has made manual or even slower automated trading less competitive for certain types of opportunities. Algorithmic Trading, driven by sophisticated computer models, now accounts for the vast majority of trading volume in many asset classes, particularly equities and futures.

- Increased Market Efficiency (to a degree): HFT and ULL strategies that exploit tiny price discrepancies can help to quickly correct minor mispricings across markets, contributing to a form of market efficiency.

- Tighter Spreads: Increased competition among speed-sensitive market makers has often led to narrower bid-ask spreads (the difference between the highest price a buyer is willing to pay and the lowest price a seller is willing to accept). This can potentially lower transaction costs for other market participants, although the benefit is debated and depends on the market.

- Increased Market Fragmentation: New trading venues (like dark pools and other alternative trading systems) emerged and competed for order flow, some of them on speed, leading to liquidity being spread across multiple locations rather than consolidated on a single exchange. This complexity adds to the challenge of finding the best price quickly.

- New Risks and Volatility: The speed and interconnectedness enabled by low latency mean that market events (including errors or unexpected reactions by algorithms) can propagate and impact prices across markets extremely rapidly, potentially contributing to increased short-term volatility or events like “flash crashes.”

- Information Advantage: Firms with the fastest access to market data and the ability to trade on it first have a significant information advantage, even if it’s only by a few microseconds. This raises questions about market fairness and equality of access.
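Fragmentation means the best available price has to be assembled from several venues, and the spread is then measured on that consolidated view. The sketch below computes a consolidated best bid and offer from per-venue top-of-book quotes, plus the quoted spread in price terms and in basis points of the midpoint. Venue names and prices are hypothetical. Real consolidated feeds, and the rules about which quotes count, are considerably more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TopOfBook:
    venue: str
    bid: float
    ask: float


def best_bid_offer(quotes):
    """Return (best_bid_quote, best_ask_quote) across all venues.

    Locked or crossed markets (best bid >= best ask) are not handled here.
    """
    if not quotes:
        raise ValueError("need at least one quote")
    best_bid = max(quotes, key=lambda q: q.bid)
    best_ask = min(quotes, key=lambda q: q.ask)
    return best_bid, best_ask


def quoted_spread(bid: float, ask: float):
    """Absolute spread and spread in basis points of the midpoint."""
    spread = ask - bid
    mid = (bid + ask) / 2
    return spread, spread / mid * 10_000


if __name__ == "__main__":
    book = [
        TopOfBook("VENUE_A", bid=99.98, ask=100.03),
        TopOfBook("VENUE_B", bid=100.00, ask=100.04),
        TopOfBook("VENUE_C", bid=99.97, ask=100.02),
    ]
    bb, ba = best_bid_offer(book)
    spread, bps = quoted_spread(bb.bid, ba.ask)
    print(f"best bid {bb.bid} @ {bb.venue}, best ask {ba.ask} @ {ba.venue}")
    print(f"spread {spread:.2f} ({bps:.1f} bps)")
```

Note that the consolidated spread is tighter than any single venue’s own spread, but capturing it requires seeing and reaching several venues quickly.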

The landscape has shifted from one where analysis and fundamental insights drove trades over longer time horizons to one where processing speed and reaction time to fleeting opportunities or small price signals are paramount for certain strategies.

Challenges, Criticisms, and the Future of Latency Compression

While the technological achievement of Exponential Latency Compression is undeniable, it has not been without its challenges and criticisms.

- The Latency Arms Race: The pursuit of ever-lower latency is incredibly expensive, requiring continuous investment in cutting-edge hardware, network infrastructure, and highly specialized personnel. This creates an “arms race” where firms must spend simply to keep pace, potentially diverting resources that could be used elsewhere.

- Fairness and Equality: Critics argue that the ability to pay for co-location and the fastest technology creates an uneven playing field, giving an inherent advantage to large, well-funded institutions over smaller firms or individual investors who cannot afford such infrastructure.

- Operational Risks: The complexity of high-speed trading systems means that software bugs or hardware failures can have rapid and significant negative consequences for the market.

- Market Stability Concerns: As mentioned, the speed can amplify market movements and potentially contribute to instability, particularly during periods of stress or uncertainty.

- Diminishing Returns: As speeds approach the fundamental limits imposed by the speed of light, the cost and effort required to achieve even tiny reductions in latency increase dramatically.

- Regulatory Scrutiny: Regulators around the world have examined HFT and the impact of low latency, considering potential rules to address fairness, stability, and transparency concerns.

Despite these challenges, the drive for speed is unlikely to cease entirely. The financial incentives for achieving even marginal latency advantages remain powerful.

Looking ahead, the future of Ultra-Low Latency (ULL) trading and Latency Compression may involve:

- Further Hardware Innovation: Continued development of specialized processing units (FPGAs, ASICs) and potentially new computing paradigms.

- Novel Communication Methods: Exploring faster-than-fiber communication technologies for specific links.

- Focus on Determinism: Ensuring not just low latency, but predictable and consistent latency, which is crucial for algorithmic reliability.

- Regulatory Adjustments: Potential changes in market rules to address the impacts of high-speed trading.
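Determinism is usually judged from the whole distribution of latencies rather than from an average. The minimal sketch below summarizes latency samples by their median, their 99th percentile, and the gap between the two as a simple tail-spread measure. The nearest-rank percentile method is our assumption (monitoring tools differ), and the sample data are synthetic, generated only for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile (p in (0, 100]) of a non-empty sequence."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_profile(samples_ns):
    """Median, p99, and tail spread (p99 - median) of latency samples in nanoseconds."""
    p50 = percentile(samples_ns, 50)
    p99 = percentile(samples_ns, 99)
    return {"p50_ns": p50, "p99_ns": p99, "tail_spread_ns": p99 - p50}


if __name__ == "__main__":
    # Two synthetic systems with similar typical latency but very different tails.
    steady = [800 + (i % 10) for i in range(1000)]
    spiky = [790 + (i % 10) if i % 50 else 5_000 for i in range(1000)]
    print("steady:", latency_profile(steady))
    print("spiky: ", latency_profile(spiky))
```

The two synthetic systems have nearly identical medians, but only the p99 figure reveals that one of them occasionally stalls. That tail behavior is what a focus on determinism aims to eliminate.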

The journey from seconds to nanoseconds in trading latency is a compelling story of technological progress and its transformative power. While it has brought increased market efficiency in some areas and enabled new forms of trading, it has also introduced complexities and challenges that market participants, regulators, and the public continue to grapple with. The pursuit of speed, driven by the quest for advantage, has forever changed the face of finance.
